A Research Team Led by Professor Dongjun Lee of SNU College of Engineering Develops Visual-Inertial Skeleton Tracking (VIST) Technology That Accurately Tracks Hand Movements in Various Environments and Tasks

Uploaded by: Administrator

Upload Date: 2021.10.19


- Demonstrates the potential of using hands and fingers as a user interface in various industries

- Published on September 29 in the renowned international journal "Science Robotics"

▲ (From left) Dr. Yongseok Lee (currently at Samsung Research), Researcher Wonkyung Do (currently at Stanford University), Researcher Hanbyul Yoon (currently at UCLA), Researcher Jinwook Huh, Researcher Weon-Ha Lee (currently at Samsung Electronics), and Professor Dongjun Lee of the Department of Mechanical Engineering, Seoul National University College of Engineering

Seoul National University's College of Engineering (Dean Byoungho Lee) announced on September 30 (Thursday) that Professor Dongjun Lee's research team of the Department of Mechanical Engineering has developed visual-inertial skeleton tracking (VIST), a robust and accurate hand motion tracking technology.

The work has been recognized for suggesting that hands and fingers could serve as a user interface in various industries, such as virtual/augmented reality, smart factories, rehabilitation, and robotics, and was published on September 29 in "Science Robotics," a renowned international journal.

The dynamic and elaborate use of hands and fingers is one of the most important characteristics of humans and is an important factor that enriches our interactions with the outside world.

However, the user interfaces currently used for robot control and virtual reality cannot make use of the rich movement of the hands and fingers as it occurs in three-dimensional space. Interaction is limited to movement on a tablet plane or to controlling an avatar with a fist that is holding a controller.

To address this, several approaches are being developed: image-based hand motion tracking using cameras and AI; tracking that measures finger angles with inertial measurement units and geomagnetic sensors; and estimation of hand motion from the deformation of soft wearable sensors. However, each has a fundamental problem (image occlusion, magnetic disturbance, and signal disturbance caused by contact with objects or the environment, respectively), which makes it difficult to apply across diverse environments and industries.

The VIST (visual-inertial skeleton tracking) technology developed by Professor Dongjun Lee's research team in the SNU Department of Mechanical Engineering is the first to solve the fundamental problems of each of these approaches. It complementarily fuses information from 7 inertial sensors and 37 markers mounted on a glove with a head-mounted camera. This allows it to track hand and finger movements robustly and accurately despite the image occlusion that occurs frequently when manipulating objects, the geomagnetic disturbances that occur near electronic equipment and steel structures, and the contact that occurs when using scissors or electric drills or when wearing tactile equipment.

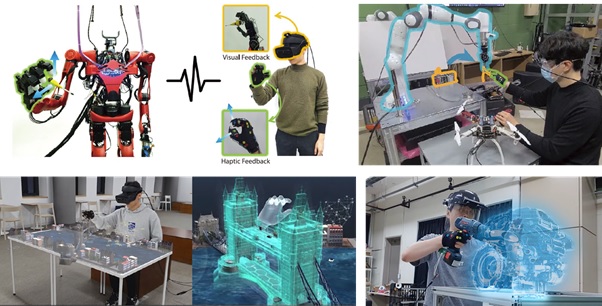

▲ The developed VIST hand motion tracking system and a conceptual diagram of its operation

In particular, for hand gestures in which many fingers move quickly and in complex ways over a small palm, the camera can very easily lose a marker and fail to track it. VIST's core technology is multi-skeleton tracking based on "tightly-coupled fusion": inertial sensors are used to enhance the camera's marker tracking performance while, at the same time, the divergence (drift) of the inertial sensor information is corrected, achieving both robustness and accuracy in hand motion tracking.
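The complementary idea described above (inertial data carrying the estimate through occlusions, camera data removing inertial drift) can be illustrated with a deliberately simplified sketch. The code below is not the VIST algorithm and is not a tightly coupled multi-skeleton tracker; it is a scalar Kalman filter on a single hypothetical joint angle. The function names `fuse` and `simulate`, the noise parameters `q` and `r`, the gyro bias, and the occlusion window are all assumptions chosen for illustration.

```python
import math

def fuse(gyro_rates, camera_angles, dt, q=1e-4, r=1e-3):
    """Scalar Kalman filter on one joint angle (illustrative only).

    gyro_rates: angular rates in rad/s; a bias makes pure integration drift.
    camera_angles: angle in rad per step, or None while the marker is occluded.
    q, r: assumed process and measurement noise variances.
    """
    angle, var = 0.0, 1.0
    estimates = []
    for rate, cam in zip(gyro_rates, camera_angles):
        # Predict: integrate the gyro rate; uncertainty grows every step.
        angle += rate * dt
        var += q
        if cam is not None:
            # Correct: a visible marker pulls the estimate back and shrinks
            # the uncertainty, which removes the accumulated drift.
            k = var / (var + r)
            angle += k * (cam - angle)
            var *= 1.0 - k
        estimates.append(angle)
    return estimates

def simulate(steps=500, dt=0.01, bias=0.2, occluded=range(200, 300)):
    """Hypothetical test signal: one joint angle following a sine wave."""
    truth = [0.5 * math.sin(2 * math.pi * (i + 1) * dt) for i in range(steps)]
    prev = [0.0] + truth[:-1]
    # Gyro reading = finite-difference rate plus a constant bias.
    gyro = [(a - b) / dt + bias for a, b in zip(truth, prev)]
    # Camera reports the true angle except while the marker is occluded.
    camera = [None if i in occluded else a for i, a in enumerate(truth)]
    imu_only = fuse(gyro, [None] * steps, dt)
    fused = fuse(gyro, camera, dt)
    return truth, imu_only, fused

truth, imu_only, fused = simulate()
print(f"IMU-only final error: {abs(imu_only[-1] - truth[-1]):.3f} rad")
print(f"Fused final error:    {abs(fused[-1] - truth[-1]):.3f} rad")
```

In this toy setup the inertial-only estimate keeps drifting, while the fused estimate stays usable during the occlusion window and recovers once the marker is visible again. A real system would estimate full 3D poses for many linked segments rather than a single angle.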

“The VIST hand motion tracking technology will enable intuitive and efficient control of robotic hands, cooperative robots, and swarm robots using hands and fingers, and it is also expected to enable natural interaction in virtual reality, augmented reality, and the metaverse. In particular, compared to existing products, its light weight (55 g), low price (material cost of $100), high accuracy (tracking error of about 1 cm), and durability (washable) give it a high potential for commercialization,” said Professor Dongjun Lee, the research director.

Meanwhile, this study was conducted with support from the Ministry of Science and ICT's Basic Research Project (middle-level research) and the National Research Foundation of Korea's Leading Research Center.

▲ Examples of VIST motion tracking applications: remote control, collaborative robot control, virtual/augmented reality